Device plugins in Kubernetes (k8s) let containerized applications use specialized hardware resources. They extend Kubernetes to support additional types of hardware beyond what it supports natively.

Like other Kubernetes plugin mechanisms, device plugins make Kubernetes more flexible and adaptable to a wide range of infrastructure and application requirements.

K8s dev ops teams have recently become more familiar with device plugins thanks to the sudden surge in demand for GPUs in k8s clusters to run AI-enabled applications. Yet device plugins are useful well beyond GPU workloads. Below we will talk about how device plugins can expose all types of hardware and make the use of k8s in IoT and Edge applications much easier and more secure.

Key Aspects of Device Plugins

nodeSelector in your deployment.

Device plugins typically run as privileged pods, usually deployed as a DaemonSet in the kube-system namespace of the cluster.

Device Plugins spend their lives looking at hardware and then talking to kubelet over a gRPC channel. The major tasks are:

- Initialize certain hardware on the node.

- Start a gRPC service listening on a Unix socket. The gRPC service must implement the DevicePlugin service specification. This is the channel used to talk to kubelet.

- Registration: Upon startup, the plugin must inform kubelet of its existence. If kubelet goes down, or on reboot, the plugin must always re-register.

- Run the gRPC service. The service tells kubelet whether hardware can be allocated for a pod requiring it, and provides information kubelet may need so that the container runtime can give the pod access to the hardware.

- Runtime configuration: In some cases, the plugin may directly configure the runtime by setting a PostStart or PreStop hook. These are typically used to set up or reset hardware before the container’s application uses it. Also, a device plugin deployment might include an initContainer, which is a container included in the deployment that runs just once and then shuts down while the rest of the pod continues to run. Such a container might be used to initialize hardware one time before pod use. Various other techniques can be used to initialize hardware as well; for instance, the plugin could talk to the hardware directly or interface with it through an installed driver. But a well-designed plugin should fit into the general design philosophy of k8s.

More details on this process are in the k8s documentation.
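The registration and re-registration steps above can be sketched in a few lines. This is a minimal sketch, not a working plugin: it uses plain Python dataclasses instead of generated gRPC stubs, the field names mirror the v1beta1 RegisterRequest message, and the resource name example.com/radio and the helper names are assumptions made up for illustration. The idea is that a plugin remembers the identity of kubelet's socket and registers again whenever that socket has been recreated.

```python
import os
from dataclasses import dataclass
from typing import Optional, Tuple

# Well-known kubelet locations for the device plugin API.
DEVICE_PLUGIN_DIR = "/var/lib/kubelet/device-plugins"
KUBELET_SOCKET = os.path.join(DEVICE_PLUGIN_DIR, "kubelet.sock")


@dataclass
class RegisterRequest:
    """Plain-Python mirror of the v1beta1 RegisterRequest fields (not a gRPC stub)."""
    version: str        # device plugin API version
    endpoint: str       # file name of the plugin's own socket in DEVICE_PLUGIN_DIR
    resource_name: str  # extended resource name, in vendor-domain/resource form


def build_register_request(resource_name: str, socket_name: str) -> RegisterRequest:
    """Build the message a plugin would send to kubelet at startup."""
    if "/" not in resource_name:
        raise ValueError("resource name must look like vendor-domain/resource")
    return RegisterRequest(version="v1beta1", endpoint=socket_name,
                           resource_name=resource_name)


def socket_identity(path: str) -> Optional[Tuple[int, int]]:
    """Return (inode, ctime_ns) for the socket, or None if it does not exist."""
    try:
        st = os.stat(path)
    except FileNotFoundError:
        return None
    return (st.st_ino, st.st_ctime_ns)


def needs_reregistration(previous, current) -> bool:
    """True when kubelet's socket exists and differs from the one last seen."""
    return current is not None and current != previous
```

A plugin's main loop would call socket_identity(KUBELET_SOCKET) periodically (or react to a filesystem notification) and send a fresh RegisterRequest whenever needs_reregistration returns True.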

Users can steer pods onto nodes with devices by requesting the device resource, or by using a nodeSelector or toleration, in their pod spec.

For instance, this pod requests one nvidia.com/gpu resource and tolerates a NoSchedule taint with the key nvidia.com/gpu:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda10.2
      resources:
        limits:
          nvidia.com/gpu: 1 # requesting 1 GPU
  tolerations:
    - key: nvidia.com/gpu
      operator: Exists
      effect: NoSchedule
```

Through the gRPC AllocateResponse message, the device plugin provides kubelet with information on how to access the device. Kubelet hands this information to the container runtime at container startup so that the specific devices are exposed. The most common task here is providing paths on the host Linux system, such as Unix sockets, device files, shared memory, or driver-created special device files, so that the container runtime can include these paths in the pod’s walled-off garden when it starts. If you are using a virtualization runtime, such as Kata Containers, more steps may be needed.
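To make the shape of that response concrete, here is a minimal sketch that mirrors the per-container part of AllocateResponse with plain dataclasses. The field names (envs, mounts, devices, container_path, host_path, permissions, read_only) follow the v1beta1 device plugin API, but these classes are stand-ins rather than the generated gRPC types, and the device IDs, paths, and the RADIO_DEVICES variable are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DeviceSpec:
    container_path: str  # where the device node appears inside the container
    host_path: str       # device node on the host, e.g. /dev/ttyUSB0
    permissions: str     # cgroup device permissions: r, w, m or a combination


@dataclass
class Mount:
    container_path: str
    host_path: str
    read_only: bool = False


@dataclass
class ContainerAllocateResponse:
    envs: Dict[str, str] = field(default_factory=dict)
    mounts: List[Mount] = field(default_factory=list)
    devices: List[DeviceSpec] = field(default_factory=list)


def allocate(requested_ids: List[str], inventory: Dict[str, str]) -> ContainerAllocateResponse:
    """Translate requested device IDs into host paths for one container.

    `inventory` maps a plugin-defined device ID to its host device file.
    """
    response = ContainerAllocateResponse()
    for device_id in requested_ids:
        host_path = inventory[device_id]  # KeyError if kubelet asks for an unknown ID
        response.devices.append(
            DeviceSpec(container_path=host_path, host_path=host_path, permissions="rw")
        )
    # Hypothetical convenience variable so the application can find its devices.
    response.envs["RADIO_DEVICES"] = ",".join(d.container_path for d in response.devices)
    return response
```

Here the device keeps the same path inside the container as on the host; a plugin could just as well map every allocated device to a fixed container path so the application never has to care which host port it was given.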

Exposing hardware beyond GPUs

Most information on the web discusses device plugins on k8s in relation to deploying workloads on Nvidia (CUDA) and AMD GPUs.

However, device plugins can, theoretically, be used to solve lots of hardware assignment issues for nodes, for everything from UART and SPI ports to purpose-built ASIC accelerators to industrial high-speed interconnects. Using either generic (we will discuss some projects below) or purpose-built device plugins can free development and dev ops teams from having to track a growing list of hardware revisions and device assignment differences across a fleet of edge devices.

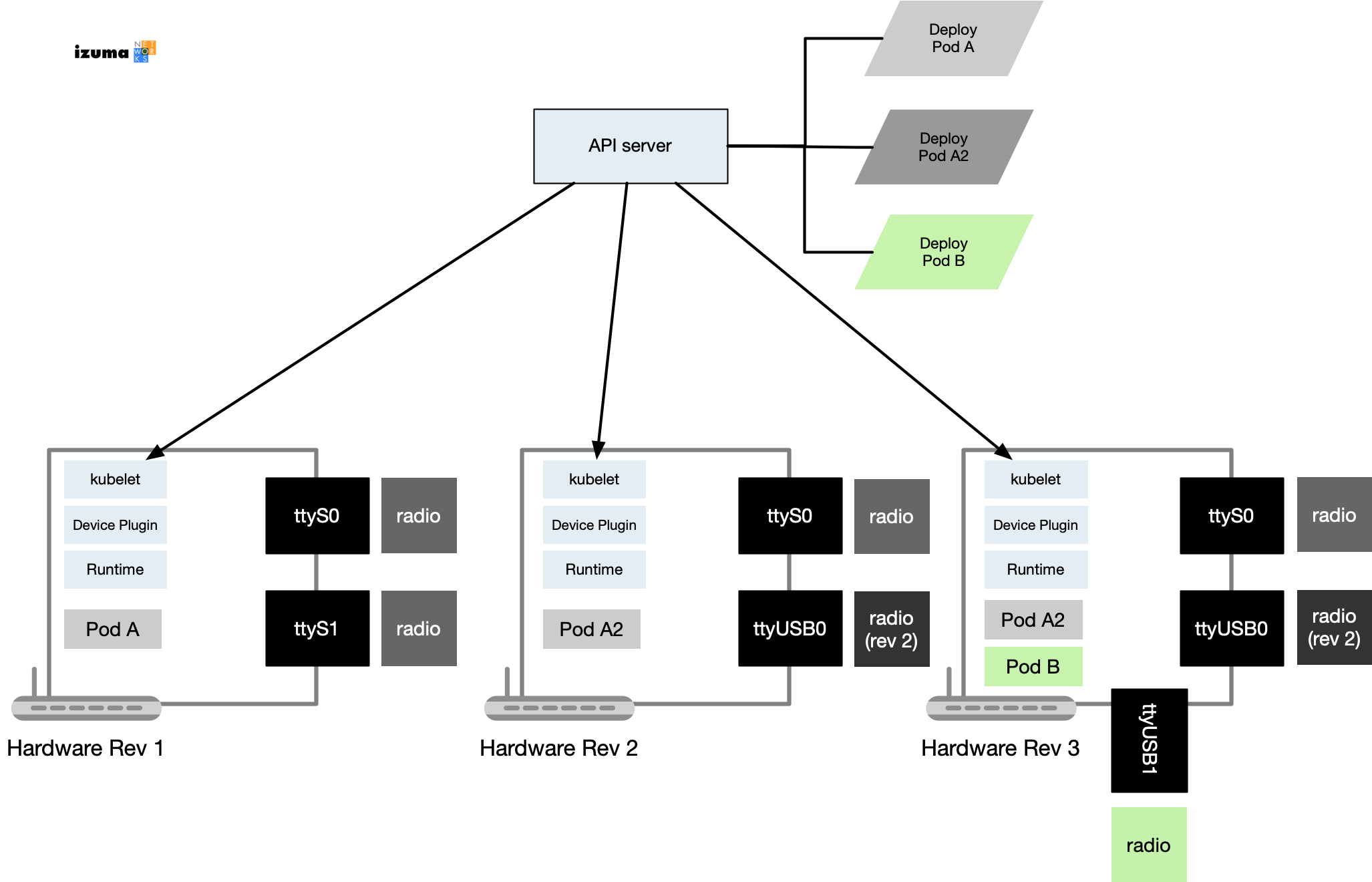

Let’s take a hypothetical example… Let’s say you have three versions of an IoT gateway in production.

- The first version used traditional UARTs talking to two radios.

- The second hardware revision used a traditional UART and a UART over USB talking to an upgraded radio.

- In the third version, the team added a new radio, for a new type of protocol. The other two radios remained the same as rev 2.

There are many ways to abstract hardware changes like this. But most of the ways outside of Kubernetes require operations teams to keep close track of the exact software versions deployed on each piece of hardware. They might also require the team’s software to be a bit smarter. For instance, it might need to probe udev for new devices, or it might need to enumerate the serial ports and then query them somehow to see what hardware is on them.

Whatever the concept, you can place this logic in the main software stack. Taking this approach means that any time you have a new radio or new protocol you need to update your deployed applications. And to keep things simple you might just tell field operations to update everything.

Using k8s with device plugins, upgrades to software and hardware could be simpler. The result is touching deployed boxes less during a software upgrade, i.e. only upgrading or deploying code to machines that actually need the software.

If each machine has a device plugin that understands your hardware layout, the plugin can tell the k8s cluster which nodes have which types of radios. (For instance, the plugin might enumerate USB devices and look at the idVendor:idProduct pair, or use similar methods.)
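As a rough illustration of the USB enumeration idea, the sketch below walks the Linux sysfs USB tree, reads each device's idVendor and idProduct files, and counts how many devices match each resource the plugin would advertise. The sysfs layout is standard on Linux; the ID-to-resource table, the resource names, and the function names are placeholders invented for this example.

```python
import os
from collections import Counter
from typing import Dict, Optional

# Placeholder table: vendor:product ID -> resource name the plugin advertises.
KNOWN_RADIOS = {
    "0403:6001": "example.com/radio-a",
    "1a86:7523": "example.com/radio-b",
}


def read_id(device_dir: str, name: str) -> Optional[str]:
    """Read a sysfs attribute such as idVendor; None if the entry lacks it."""
    try:
        with open(os.path.join(device_dir, name)) as f:
            return f.read().strip().lower()
    except OSError:
        return None


def discover_radios(sysfs_root: str = "/sys/bus/usb/devices") -> Dict[str, int]:
    """Count attached USB devices per advertised resource name."""
    counts: Counter = Counter()
    for entry in sorted(os.listdir(sysfs_root)):
        device_dir = os.path.join(sysfs_root, entry)
        vendor = read_id(device_dir, "idVendor")
        product = read_id(device_dir, "idProduct")
        if vendor is None or product is None:
            continue  # interface entries (e.g. 1-1:1.0) carry no ID files
        resource = KNOWN_RADIOS.get(f"{vendor}:{product}")
        if resource is not None:
            counts[resource] += 1
    return dict(counts)
```

The resulting counts are what a plugin would turn into the device list it reports to kubelet, so each node ends up advertising only the radios it actually has.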

In turn, operations teams simply need to upgrade pod deployments and specify in the deployment the type of hardware required. K8s will know, through the device plugin information, which containers should go to which devices. Such an approach is even more useful when, as is often the case, hardware can be reconfigured in the field - by either your own FAEs or your customers.
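Specifying the required hardware in a deployment comes down to a resource limit on the container. The sketch below builds such a Deployment manifest as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The resource name example.com/radio-b, the image name, and the function name are assumptions for illustration only.

```python
import json
from typing import Any, Dict


def radio_deployment(name: str, image: str, resource_name: str,
                     count: int = 1, replicas: int = 1) -> Dict[str, Any]:
    """Build a Deployment whose pods each require `count` units of a device resource."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # The scheduler only places the pod on a node whose
                        # device plugin advertises this resource.
                        "resources": {"limits": {resource_name: count}},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = radio_deployment("radio-b-bridge", "registry.example.com/radio-b-bridge:1.0",
                                "example.com/radio-b")
    print(json.dumps(manifest, indent=2))
```

With this approach the manifest never names a hardware revision; it names the radio, and the device plugins on each node decide where that radio exists.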

Generic Device Plugins

At this point, you are probably thinking - well, serial ports or anything else exposed by udev is a pretty common thing. Surely there are some projects that provide a plugin that can generically see hardware differences. Here are a few…

Akri

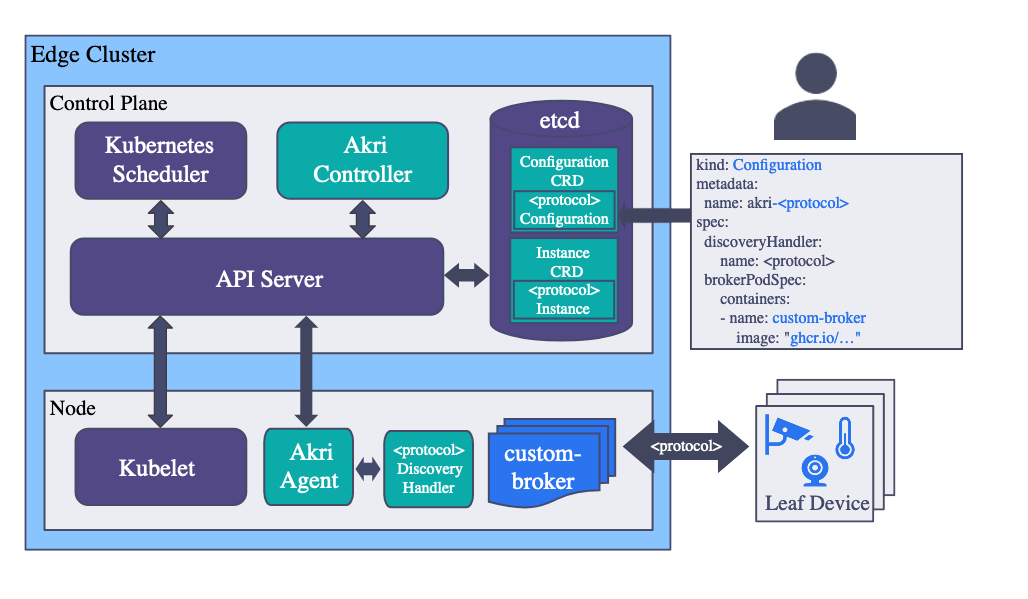

Akri terms itself a “Kubernetes Resource Interface.” Akri is essentially a universal device plugin with a robust service behind it which is deployed in the cloud part of your Kubernetes edge design. Akri can auto-deploy pods (which it terms “brokers”) when it sees a certain kind of device, as well as remove the pod when the device vanishes. These pods handle the protocol and the work of talking to the device itself, and make use of the device in your design. Devices are “seen” by Discovery Handlers, each of which covers a specific protocol or discovery method. Akri ships with three Discovery Handlers: the udev handler plus OPC-UA (usually industrial devices) and ONVIF (for IP cameras) handlers.

Other Discovery Handlers can be created and fit into Akri. The system is designed to be extensible. For details on the architecture read the detailed explanations.

Upsides

Significant thought has been put into Akri and it seems well designed but not overly complex. If you are trying to connect IP cameras or OPC-UA devices you should visit Akri’s docs and take a look in depth at what it does to see if it will fit your needs.

For devices seen in udev it will depend on your specific use case.

Akri’s developers are very active (this article was published in Oct 2023). The code is also modern: almost all of it is written in Rust.

Considerations

As with any OSS project, you will be tying yourself to another project and the people behind it, who will only spend as much time as their employers allow them. It has ~1k stars today - which is not huge but respectable. And while Akri’s development team is active, its main contributors are confined to a very small group.

The project is a CNCF Sandbox project. However, bear in mind that CNCF has almost 200 projects, 109 of which are sandbox projects (as of Oct 2023).

generic-device-plugin

The aptly named generic-device-plugin is exactly that. This is an early-stage project that is not yet widely used (but is active), and it may be worth a look depending on your use case. Unlike Akri, it doesn’t attempt to auto-deploy pods. Instead, it allows the deployment of pods based on a resource limit the plugin provides to the API server. If Akri seems a bit heavy-weight for your design, then definitely look at this project.

This project is written in Go, which may be a better fit for your team than Rust.

The README of the project explains it succinctly enough.

Bear in mind that using this one will require more work and customization from your dev team. That being said, it may be a good start for your own custom device plugin as well.

smarter-device-manager

This one is hiding in GitLab - and it’s not actively maintained (last real work 3 years ago). However, if you need another take on a design, consider browsing the project. Interestingly, smarter-device-manager allows containers to access devices simultaneously. We are not sure how well that would really work in practice. It is also written in Go.

Generic or Custom Device Plugin?

So should your team try to use these projects, or just go write your own?

If you have a simple setup, where it’s easy to find the different hardware your machines may have by just looking at udev or the filesystem, the above projects might work standalone.

The challenge is always the edge cases.

No pun intended.

In the end, it will depend on your situation, and specifically how much custom setup or device querying is needed for your hardware. Going back to the earlier example use case, if you have radios hanging off an FTDI USB-to-UART chip, and that chip always looks the same in udev (regardless of what’s behind it) then your solution may be simpler if you just write your own plugin. Then, right inside the plugin, do the hardware query steps needed to determine your radios - perhaps through a command any radio you ship has built-in.
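The hardware query step could look something like the sketch below. Everything protocol-specific here is hypothetical: the ID? command, the RADIO-A and RADIO-B replies, and the resource names stand in for whatever identification command your radios actually support. The port argument is any object with write() and readline() methods, which keeps the logic testable without hardware.

```python
from typing import Dict, Optional

# Hypothetical identification command and the replies each radio type gives.
IDENTIFY_COMMAND = b"ID?\r\n"
RADIO_RESOURCES: Dict[bytes, str] = {
    b"RADIO-A": "example.com/radio-a",
    b"RADIO-B": "example.com/radio-b",
}


def identify_radio(port) -> Optional[str]:
    """Ask the device behind a serial port what it is.

    Returns the resource name to advertise, or None when the reply is
    empty or not recognized.
    """
    port.write(IDENTIFY_COMMAND)
    reply = port.readline().strip()
    return RADIO_RESOURCES.get(reply)


class FakePort:
    """Stand-in for a serial port, useful for trying the logic without hardware."""

    def __init__(self, reply: bytes):
        self.reply = reply
        self.sent = b""

    def write(self, data: bytes) -> int:
        self.sent += data
        return len(data)

    def readline(self) -> bytes:
        return self.reply


if __name__ == "__main__":
    print(identify_radio(FakePort(b"RADIO-B\r\n")))
```

On a real node, the port would be the opened serial device (for example /dev/ttyUSB0) configured with the right baud rate and a read timeout, so that a silent or missing radio does not block the plugin.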

A hybrid approach is to fork one of these projects while continuing to keep up with upstream changes as available. In the case of Akri, consider using their extensible framework to build a “device plugin.”

Good luck and let us know if we can help in edge Kubernetes deployments!
